Announcing Mockery v3

Mockery v3 will make obsolete all other interface-based code generation frameworks that currently exist in the Go community. A bold statement, you might say? Fortunately, I have quite the justification for this.

State of the Art

Let's recount the current state of code generators in the Go community, with a particular focus on ones that generate implementations of interfaces. Here are some of the notable examples I know about:

| URL | Description | GitHub Star Count |
|---|---|---|
| https://github.com/matryer/moq | Generates mock implementations of Go interfaces for use in tests. | 2.1k |
| https://github.com/uber-go/mock | Forked from https://github.com/golang/mock, this is also a mock generation framework that is popular in the Go community. | 2.7k |
| https://github.com/hexdigest/gowrap | Generates "decorators" for Go interfaces. You can generate things like Prometheus metric decorators that measure the time it took to call a method, logging decorators, rate limiters, timeouts... it's pretty neat! | 1.2k |
| https://github.com/maxbrunsfeld/counterfeiter | Generates test doubles for interfaces. | 1k |
| https://github.com/gojuno/minimock | Yet another mock generation tool! | 674 |

And on and on. In fact, awesome-go publishes a list just for mock generators. The point is that there are a lot of them and they all serve different use-cases and approach mocking, testing, and decorating from different angles. Clearly there's a lot of interest in code generators. The only problem is:

They Are All Slow

Let's do some tests with various projects to see how long generating a mock implementation of a single interface takes.

gowrap

Most of these tools let you generate implementations for any interface you want, whether it's in your own project, external projects on the interwebz, or even the standard library. Let's create an implementation for io.Reader:

$ time gowrap gen -p io -i Reader -t ratelimit -o out.go

gowrap gen -p io -i Reader -t ratelimit -o out.go 0.37s user 0.59s system 60% cpu 1.578 total

Uh, wow! Nearly 1.6s of wall-clock time for a single interface. That's kind of slow, right?

moq

Is moq any better? [1]

$ time moq -out out.go internal/fixtures/ Requester

moq -out out.go internal/fixtures/ Requester 0.20s user 0.50s system 123% cpu 0.571 total

Nope!

uber-go/mock

But wait, what about Uber? They must write good, performant code right? The project was even forked from Google itself!

$ time mockgen github.com/vektra/mockery/v3/internal/fixtures Requester >/dev/null

mockgen github.com/vektra/mockery/v3/internal/fixtures Requester > /dev/null 1.62s user 0.60s system 239% cpu 0.932 total

Ah dang, nope. It's still slow. In all transparency, these comparisons are not entirely fair because in the moq and uber-go/mock examples, I'm asking them to parse the syntax for the entire package instead of just a single file. Let's see what happens if we modify the mockgen command to parse just a single file (which puts it into what they call "source" mode, as opposed to "package" mode):

$ time mockgen -source=./internal/fixtures/requester.go Requester >/dev/null

mockgen -source=./internal/fixtures/requester.go Requester > /dev/null 0.16s user 0.27s system 184% cpu 0.234 total

It's indeed faster, but it's still nearly a quarter of a second for a single interface.

The //go:generate Workflow

Let's meander down into Mat Ryer's moq README.md. We see that the recommendation is to add a //go:generate directive next to the interface you want to generate mocks for like this:

package my

//go:generate moq -out myinterface_moq_test.go . MyInterface
type MyInterface interface {
	Method1() error
	Method2(i int)
}

//go:generate is a neat little tool introduced waaaay back in Go 1.4 that works like this:

- Place the //go:generate directive in your code with a command that you want to run.

- At the top of your project, run go generate ./...

- Go will then recursively look for all instances of this directive and run the command therein as a subprocess.

- The command runs, does what it does (which likely involves parsing your code's syntax tree), and outputs some kind of file, usually a .go file with real code in it.
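To make the discovery step a bit more concrete, here is a minimal sketch of how directive lines could be picked out of a source file. This is not the actual go generate implementation: findDirectives and the sample source are names made up for illustration, the sketch only handles a single space after the directive, and it lists the commands rather than running them.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// directive is the prefix a line must start with (no leading whitespace).
const directive = "//go:generate "

// src is a hypothetical source file reusing the moq example from above.
const src = `package my

//go:generate moq -out myinterface_moq_test.go . MyInterface
type MyInterface interface {
	Method1() error
	Method2(i int)
}
`

// findDirectives returns the command portion of every line in src
// that begins with the //go:generate prefix.
func findDirectives(src string) []string {
	var cmds []string
	sc := bufio.NewScanner(strings.NewReader(src))
	for sc.Scan() {
		line := sc.Text()
		if strings.HasPrefix(line, directive) {
			cmds = append(cmds, strings.TrimSpace(strings.TrimPrefix(line, directive)))
		}
	}
	return cmds
}

func main() {
	// The real tool would start each command as its own subprocess;
	// this sketch only prints what it found.
	fmt.Println(findDirectives(src)) // [moq -out myinterface_moq_test.go . MyInterface]
}
```

The point to notice is that every directive becomes a separate command invocation, which is what makes the per-invocation startup cost add up.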

You can read about this on the go.dev blog. Anyone who has used Go for any amount of time is likely already very familiar with this, so I'll spare you any further explanation.

What's Going On?

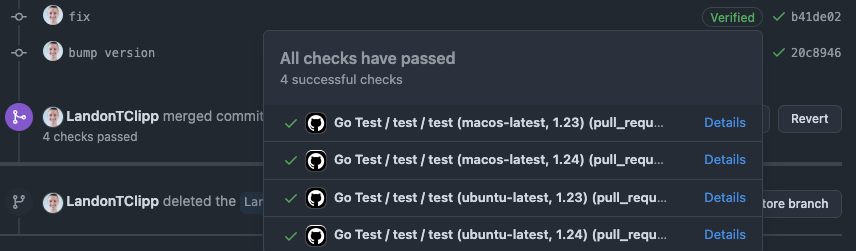

Given the buildup of what I've demonstrated, you might see where this is going: a proliferation of //go:generate directives that each take around half a second to run. If you have a project with dozens, even hundreds of interfaces, you're looking at a runtime of... (consults calculator)... somewhere in the ballpark of 20 seconds to even over 100 seconds. That's crazy! Why does anyone subject themselves to this torture? How long must I stare at my GitHub CI/CD checks, waiting for those sweet little green checkmarks we all know and love?

We're Parsing Syntax

As it turns out, the majority of time in all of these tools is spent on parsing syntax. More specifically, they spend most of their time in a single function call: packages.Load(). This function is the meat and potatoes of all projects that parse Go syntax. It lives in golang.org/x/tools/go/packages, a package maintained by the Go project itself, and it does the heavy lifting of parsing syntax and type information and unmarshalling it into easily digestible Go structs and methods and, generally, a structured Abstract Syntax Tree, as it's called amongst computer scientists (of which I am not one).
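For a feel of what "parsing syntax" means here, the sketch below uses only the standard library's go/parser and go/ast to pull the method names out of an interface. It is deliberately much simpler than packages.Load(): it reads one source string, resolves no types, and loads no dependencies. The interfaceMethods helper and the sample source are assumptions made for this illustration.

```go
package main

import (
	"fmt"
	"go/ast"
	"go/parser"
	"go/token"
)

// src is a hypothetical source file containing the interface to inspect.
const src = `package my

type MyInterface interface {
	Method1() error
	Method2(i int)
}
`

// interfaceMethods parses source and returns the explicitly declared
// method names of the interface called name. Embedded interfaces have
// no names in the AST and are skipped by this sketch.
func interfaceMethods(source, name string) ([]string, error) {
	fset := token.NewFileSet()
	file, err := parser.ParseFile(fset, "my.go", source, 0)
	if err != nil {
		return nil, err
	}
	var methods []string
	ast.Inspect(file, func(n ast.Node) bool {
		spec, ok := n.(*ast.TypeSpec)
		if !ok || spec.Name.Name != name {
			return true // keep walking the tree
		}
		iface, ok := spec.Type.(*ast.InterfaceType)
		if !ok {
			return false // the named type is not an interface
		}
		for _, field := range iface.Methods.List {
			for _, ident := range field.Names {
				methods = append(methods, ident.Name)
			}
		}
		return false
	})
	return methods, nil
}

func main() {
	methods, err := interfaceMethods(src, "MyInterface")
	if err != nil {
		panic(err)
	}
	fmt.Println(methods) // [Method1 Method2]
}
```

A real generator also needs the fully resolved types in each method signature, which is where the expensive part comes in.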

To get any use out of packages.Load(), you need to tell it that you want it to load:

- The list of files in each package.

- Plus its imports

- Plus type information

- Plus type-annotated syntax

- And the above for all its dependencies.

That last item is a real drag because almost every package is going to have some kind of external dependency, and to generate implementations of interfaces, you usually need to include those external types in your method signatures. So if we run //go:generate commands over and over and over again, we keep re-parsing the exact same dependencies every single time with no caching whatsoever! Wow!

So I had a novel thought: what if we cached that mofo?

The Solution

I did a little experiment with my very own code-generation project at https://github.com/vektra/mockery that also fell into this same pitfall of relying on //go:generate for everything. What if I collected the list of all packages I wanted to generate mocks for and passed that list into a single call of packages.Load()? Would it be smart enough to not re-parse the dependencies over and over again? It turns out the answer was emphatically YES. In a project with 105 interfaces, I reduced the runtime by about a factor of 5. After some further enhancements, I was able to get this down even further.
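The effect can be illustrated with a toy simulation. This is not mockery's code and it does not call packages.Load(); the import graph and the load function are invented for the example. Each call to load stands in for one process, so whatever it has already parsed is forgotten when the call returns.

```go
package main

import "fmt"

// deps is a hypothetical import graph: each package maps to its imports.
var deps = map[string][]string{
	"repo/a":   {"io", "net/http"},
	"repo/b":   {"io", "net/http"},
	"repo/c":   {"net/http"},
	"net/http": {"io"},
	"io":       {},
}

// load simulates parsing the given packages plus all of their transitive
// dependencies and returns how many packages had to be parsed. The seen
// set lives only for the duration of one call, like one process's memory.
func load(pkgs ...string) int {
	seen := map[string]bool{}
	parsed := 0
	var visit func(p string)
	visit = func(p string) {
		if seen[p] {
			return // already parsed during this call
		}
		seen[p] = true
		parsed++
		for _, d := range deps[p] {
			visit(d)
		}
	}
	for _, p := range pkgs {
		visit(p)
	}
	return parsed
}

func main() {
	targets := []string{"repo/a", "repo/b", "repo/c"}

	// One invocation per package, as with one //go:generate line each.
	separate := 0
	for _, t := range targets {
		separate += load(t)
	}

	// A single invocation that receives the whole list.
	batched := load(targets...)

	fmt.Println(separate, batched) // 9 5
}
```

In this toy graph the shared dependencies are parsed three times in the one-call-per-package case and once in the batched case. The gap grows with the number of packages that share dependencies.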

10x Performance Increase

Users coming from other code generation projects have reported around a whole gaht dang order of magnitude (~10x) increase in performance after switching to mockery, with its stance of "//go:generate sucks, don't use it".

I call my solution the packages: feature because the config looks like this:

packages:
  github.com/user/repo1:
    interfaces:
      Foo:
      Bar:
  github.com/user/repo2:
    interfaces:
      Hello:
      World:

What About v3?

The packages: config feature was introduced in v2 and has been around a while to great success, so what does that have to do with v3? Well, v2 was stuck only generating what I call "mockery-style" mocks. The project only made mocks in the way the mockery project wants mocks to be made. What about all those other projects I listed up above? They still suffer from the same issue I solved, and surely there is a lot of demand for faster mocks.

That's what v3 is.

Templates

I feel like Steve Ballmer when he got up on stage chanting "deVELoper deVELopers DEVELOPERS [sigh]... [pant]...". Except for me it's more like "TEMplates TEMplates TEMPLATES!"

Mockery's codebase in v1 was, how shall I say this lightly, an absolute nightmare. I actually inherited this project from another talented fellow, but after some historic cruft and missteps of my own, I created somewhat of a monster. Mockery did use templates, but they were scattered around in various pieces of the codebase and generally impossible to follow. v2 improved upon the ergonomics and performance, but it still suffered from this historical cruft that I had to support.

My first task in v3 was to unify these disparate templates into a single mockery.tmpl file. Once I did that, I thought to myself, "huh, wouldn't it be nice if I ported the https://github.com/matryer/moq style of mocks into mockery's templating system?" I tried it out and after some tinkering, it kind of just worked.

So then I thought to myself [2]: why don't we just let mockery pull in any arbitrary template and give that template a defined function and data set it can use? There's no reason we can't allow this. And so, thus was born the mockery templating framework.
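To show the general idea of "a template plus a defined function and data set", here is a minimal sketch built on the standard library's text/template. Everything in it is hypothetical: the Interface and Method types, the stubName function, and the template text are stand-ins for illustration and are not mockery's actual template data or functions.

```go
package main

import (
	"fmt"
	"strings"
	"text/template"
)

// Method and Interface are made-up data shapes handed to the template.
type Method struct {
	Name      string
	Signature string // parameters and results, e.g. "(i int)"
}

type Interface struct {
	Name    string
	Methods []Method
}

// tmplText is a made-up template that renders a stub implementation.
const tmplText = `type {{ stubName .Name }} struct{}
{{ range .Methods }}
func (s *{{ stubName $.Name }}) {{ .Name }}{{ .Signature }} { panic("not implemented") }
{{ end }}`

// render executes the template with a small function map and the
// interface description, returning the generated source text.
func render(iface Interface) (string, error) {
	funcs := template.FuncMap{
		"stubName": func(name string) string { return name + "Stub" },
	}
	t, err := template.New("stub").Funcs(funcs).Parse(tmplText)
	if err != nil {
		return "", err
	}
	var sb strings.Builder
	if err := t.Execute(&sb, iface); err != nil {
		return "", err
	}
	return sb.String(), nil
}

func main() {
	out, err := render(Interface{
		Name: "MyInterface",
		Methods: []Method{
			{Name: "Method1", Signature: "() error"},
			{Name: "Method2", Signature: "(i int)"},
		},
	})
	if err != nil {
		panic(err)
	}
	fmt.Print(out)
}
```

Swapping in a different template text changes the style of the generated code without touching the parsing side, which is the separation the paragraph above describes.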

So then after that, I thought to myself: why does ANY code generation project exist anymore? We can port all of them to the mockery framework. I've solved the configuration and parsing problem. Like, I've done it. This is the solution. As far as I can tell, no one else has done this, at least not to any visible fanfare. gowrap is the closest project, since it lets its users specify remote templates, but it still has performance problems.

The Case For Mockery

I hope by this point I've convinced you that mockery is the Go community's future for interface-based code generation. I've engineered v3 to empower template developers to maintain and distribute their own templates, rendered with mockery, out into the world. My intention is to subsume all the major projects I listed above into the gentle embrace of mockery's framework and make developers' lives... better? No, that sounds too self-righteous. I guess, make your lives a little more pleasant and productive.

Anywho, give Mockery v3 a try and let me know your thoughts!

1. Note, I had to point moq to a locally hosted file instead of just specifying io.Reader because it apparently only supports parsing syntax from local files.

2. Actually, this is a lie. I didn't think this to myself; it was explicitly suggested to me by @breml in https://github.com/vektra/mockery/discussions/715#discussioncomment-7106461. If you're reading this, Lucas, THANK YOU by the way. Your insight steered this project in a wonderful direction.